Abstract

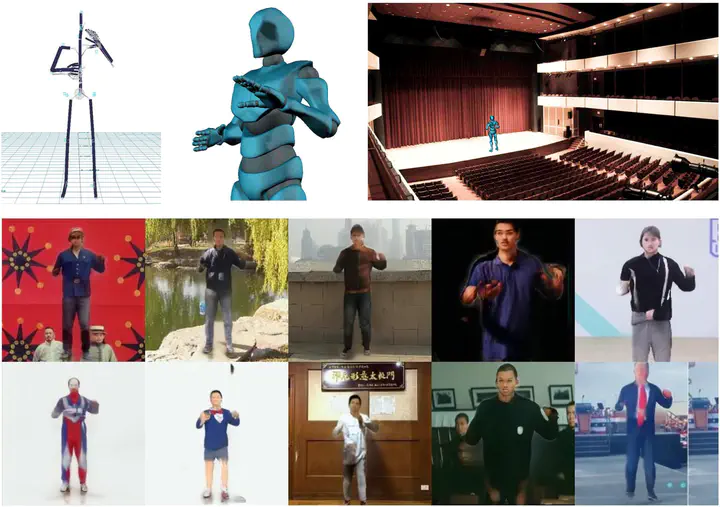

In this demo, we present VirtualConductor, a system that generates a conducting video from a given piece of music and a single image of the user. First, we collect and construct a large-scale conductor motion dataset. Then, we propose an Audio Motion Correspondence Network (AMCNet) and use adversarial-perceptual learning to learn the cross-modal relationship and generate diverse, plausible, music-synchronized motion. Finally, we combine 3D animation rendering with a pose transfer model to synthesize the conducting video from the single user image. As a result, any user can become a virtual conductor through the VirtualConductor system.

Publication

In IEEE International Conference on Multimedia and Expo (ICME) 2021, demo track. [ArXiv]

This demo won the ICME 2021 Best Demo Award.